Now that Amazon’s Elastic Block Store (EBS) is publicly available, running a complete Django installation on Amazon Web Services (AWS) is easier than ever.

Why EBS? EBS provides persistent storage, which means that the Django database is kept safe even after the Django EC2 instances terminate.

This tutorial will take you through all the necessary steps for setting up Django with a persistent PostgreSQL database on AWS. I will be assuming no prior knowledge of AWS, so those of you who have dabbled with it before might want to skim through the first steps. Knowing your way around Django is an advantage but not a requirement.

I am deliberately keeping things simple—to get a deeper understanding of the hows and whys of AWS you should take a look at James Gardner’s excellent article as well as the official documentation.

The command line tools can be a bit intimidating, so I will also show you how Elasticfox can be a fully satisfactory alternative.

Summary

We are going to register with AWS, get acquainted with Elasticfox, start up an EC2 instance, install Django and PostgreSQL on the instance, and finally mount an EBS volume and move our database to it.

Step 1: Set up an AWS account

To use AWS you need to register at the AWS web page. If you already have an account with Amazon you can extend this to also cover AWS.

Step 2: Download and install the Elasticfox Firefox extension

This tool will make life a whole lot easier for you. Down the road you won’t be able to avoid the official command line tools (or boto, if you want to access AWS programmatically), but for now, let’s stick with Elasticfox.

You can install the extension from this page.

Step 3: Add your AWS credentials to Firefox

Launch Elasticfox (‘Tools’ -> ‘Elasticfox’) and click the ‘credentials’ button. Enter your account name (typically the email address you registered with), your AWS access key and your AWS secret access key. This information can be found under ‘Your web services account’ on the AWS start page.

Step 4: Create a new EC2 security group

Let’s pause for a while to consider what we are doing.

You will be running your Django installation off an EC2 instance. There is no magic to them at all—they are simply fully functional servers that you access the same way as, say, a dedicated server or a web hosting account.

By default, EC2 instances are an introverted lot: they prefer keeping to themselves and don’t expose any of their ports to the outside world. We will be running a web application on port 8000, so that port has to be opened. (Normally we would open port 80, but since I will only be using the Django development web server, port 8000 is preferable.) SSH access is also essential, so port 22 must be opened as well.

To make this happen we must create a new security group where these ports are opened.

Click on the ‘Security Groups’ tab and then the ‘Refresh’ icon. The list should update to show you the ‘default’ group.

Then click the ‘Create Security Group’ icon and create a new group named ‘django’.

Now we need to add the actual permissions. Click the ‘Grant Permission’ icon and add ‘From port 8000 to 8000’ under ‘Protocol Details’. Repeat the same action for port 22.
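Elasticfox does all of this through its GUI. For reference, here is a minimal sketch of the same two rules expressed as the data structure that boto3 (the current successor of the boto library mentioned earlier) accepts in authorize_security_group_ingress. The helper name django_ingress_rules is hypothetical, and opening both ports to 0.0.0.0/0 (the whole internet) is an assumption; restricting port 22 to your own IP address would be safer.

```python
# Hypothetical helper: builds the two ingress rules from Step 4 in the
# dictionary format used by boto3's EC2 client
# (authorize_security_group_ingress(IpPermissions=...)).


def django_ingress_rules(ports=(8000, 22), cidr="0.0.0.0/0"):
    """Return one TCP rule per port, each open to the given CIDR range."""
    rules = []
    for port in ports:
        rules.append({
            "IpProtocol": "tcp",
            "FromPort": port,  # 'From port ...'
            "ToPort": port,    # '... to port' (same value: a single port)
            "IpRanges": [{"CidrIp": cidr}],
        })
    return rules


if __name__ == "__main__":
    for rule in django_ingress_rules():
        print(rule["IpProtocol"], rule["FromPort"], rule["ToPort"],
              rule["IpRanges"][0]["CidrIp"])
    # With boto3 installed and credentials configured, the rules could be
    # applied roughly like this (not executed here):
    #   import boto3
    #   ec2 = boto3.client("ec2")
    #   ec2.create_security_group(GroupName="django", Description="Django tutorial")
    #   ec2.authorize_security_group_ingress(GroupName="django",
    #                                        IpPermissions=django_ingress_rules())
```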

Your security group is now ready for use.

Step 5: Set up a key pair

Having a security group is not enough; we also have to set up a key pair to access the instance via SSH.

Why is this necessary? Think about it: You are launching a server instance but no one has told you the root password. So, setting up a private/public key pair is the only way to gain access.

Click on the ‘KeyPairs’ tab and then the ‘Create a new keypair’ icon. Name your new key pair ‘django-keypair’. A save dialog will pop up, allowing you to save the private key in a safe location. Use the filename ‘django.pem’.
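OpenSSH refuses to use a private key file that other users can read, so it is worth tightening the permissions on django.pem right after saving it (the shell equivalent is chmod 600 django.pem). A minimal Python sketch; secure_private_key is a hypothetical helper name, and the file location is an assumption.

```python
import os
import stat


def secure_private_key(path):
    """Make `path` readable and writable by its owner only (mode 0600).

    Returns the resulting permission bits so the caller can verify them.
    """
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
    return stat.S_IMODE(os.stat(path).st_mode)


if __name__ == "__main__":
    key_file = "django.pem"  # assumes the key was saved in the current directory
    if os.path.exists(key_file):
        print(oct(secure_private_key(key_file)))
    else:
        print(key_file, "not found")
```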

Step 6: Launch an EC2 instance

I have a certain fondness for Fedora, so I’ll be using the fedora-8-i386-base-v1.07 AMI with AMI ID ami-2b5fba42.

Return to the ‘AMIs and Instances’ tab.

If you click the ‘Refresh’ icon in the ‘Machine Images’ section you will get a list of all public images. To find the one we’re after, enter ‘fedora-8’ in the search box—after a while all the relevant images will appear.

Right-click the image with the AMI ID as above and select ‘Launch instance(s) of this AMI’.

This is where the actions from the previous steps start making sense. Set the key pair to ‘django-keypair’ and add the ‘django’ security group to the launch set. Leave all the other settings as they are. Then click the ‘Launch’ button.

Important: From this point on, the meter is running! If the fire alarm goes off, you get bored with this tutorial, or anything else comes up, remember to shut down the instance before you leave; otherwise it will cost you $2.40 per day.
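The $2.40 figure works out to 10 cents per instance-hour. A small sketch of the arithmetic, assuming (as EC2 did when this was written) that every started instance-hour is billed as a full hour; current prices and billing granularity differ, so treat the rate as an example only. The function name instance_cost is hypothetical.

```python
import math
from decimal import Decimal

DAILY_COST = Decimal("2.40")   # figure quoted in the tutorial
HOURLY_RATE = DAILY_COST / 24  # 0.10 dollars per instance-hour


def instance_cost(hours):
    """Cost of one instance running for `hours`.

    Assumes every started instance-hour is billed as a full hour.
    """
    billed_hours = math.ceil(hours)
    return HOURLY_RATE * billed_hours


if __name__ == "__main__":
    print("hourly rate:", HOURLY_RATE)
    print("15-minute test run:", instance_cost(0.25))
    print("forgotten for 30 days:", instance_cost(24 * 30))
```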

The ‘Your Instances’ section should update, showing you that the instance you just launched is ‘pending’. Click the ‘Refresh’ icon after a while—in a minute or so the status should change to ‘running’.

Step 7: Connect with your new instance

Double-click the running instance and copy the ‘Public DNS Name’ entry. This is the domain name you use to access the instance from the outside. In this tutorial, my instance is hosted at ‘ec2-75-101-248-101.compute-1.amazonaws.com’.

Now we are going to SSH into the instance. I am doing this via Cygwin on Windows, but any SSH client should do. If you are on Windows and have PuTTY installed, you can even start the session directly from Elasticfox by right-clicking the running instance and selecting ‘SSH to Public DNS Name’.

Let’s start with a basic sanity check:

    $ ssh root@ec2-75-101-248-101.compute-1.amazonaws.com
    The authenticity of host 'ec2-75-101-248-101.compute-1.amazonaws.com (75.101.248.101)' can't be established.
    RSA key fingerprint is db:0a:85:36:99:5f:65:6b:c7:77:3e:37:59:fc:16:fd.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'ec2-75-101-248-101.compute-1.amazonaws.com,75.101.248.101' (RSA) to the list of known hosts.
    Permission denied (publickey,gssapi-with-mic).

As expected, this isn’t working; we need to use the private key you saved earlier. Go to the directory where you saved the django.pem file and type the following:

    $ ssh -i django.pem root@ec2-75-101-248-101.compute-1.amazonaws.com

           __|  __|_  )  Fedora 8
           _|  (     /    32-bit
          ___|\___|___|

     Welcome to an EC2 Public Image
                           :-)
        Base

    [root@ ~]#

That’s better!

If you point your browser at ‘http://ec2-75-101-248-101.compute-1.amazonaws.com:8000/’ now, you should get a ‘can’t establish a connection’ error, since no web server is running on port 8000 yet.
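If you prefer checking from a script rather than a browser, a plain TCP connection attempt tells you whether anything is listening. A minimal Python 3 sketch; the function name port_is_open is hypothetical, and a False result cannot tell a port blocked by the security group apart from one on which nothing is listening yet.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers 'connection refused', timeouts and DNS lookup failures.
        return False


# Usage (replace the host with your own Public DNS Name):
#   port_is_open("ec2-75-101-248-101.compute-1.amazonaws.com", 22)    # SSH
#   port_is_open("ec2-75-101-248-101.compute-1.amazonaws.com", 8000)  # Django
```

Before Step 9 the check on port 8000 should return False; once the development server is running it should return True.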

Step 8: Install required software

Most AMIs are stripped to the bone, so we have to add the software packages we need to get Django up and running. The required steps will of course vary from AMI to AMI, but running the following script as root is sufficient for our v1.07 Fedora 8 instance:

    # Install subversion
    yum -y install subversion

    # Install, initialize and launch PostgreSQL
    yum -y install postgresql postgresql-server
    service postgresql initdb
    service postgresql start

    # Modify PostgreSQL config to avoid username/password problems
    # Note: This grants access to _all_ local traffic!
    cat > /var/lib/pgsql/data/pg_hba.conf <<EOM
    local all all trust
    host all all 127.0.0.1/32 trust
    EOM

    # Restart PostgreSQL to enable new security policy
    service postgresql restart

    # Set up a database for Django
    psql -U postgres -c "create database djangotest encoding 'utf8'"

    # Install Django (I always checkout from SVN)
    cd /opt
    svn co http://code.djangoproject.com/svn/django/trunk/ django-trunk
    ln -s /opt/django-trunk/django /usr/lib/python2.5/site-packages/django
    ln -s /opt/django-trunk/django/bin/django-admin.py /usr/local/bin

    # Install psycopg2 (for database access from Python)
    yum -y install python-psycopg2
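Before moving on, it is worth confirming that the symlink and the psycopg2 package really make both modules importable. A minimal sketch for a Python 3 interpreter (the Fedora 8 AMI ships Python 2.5, where running python -c 'import django, psycopg2' achieves the same); missing_modules is a hypothetical helper.

```python
import importlib.util


def missing_modules(names=("django", "psycopg2")):
    """Return the names from `names` that the interpreter cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Not importable:", ", ".join(missing))
    else:
        print("Django and psycopg2 are both importable")
```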

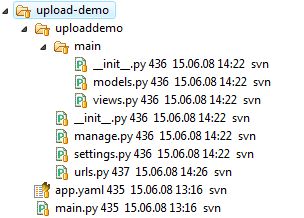

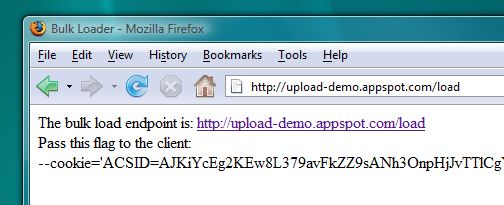

Step 9: Set up a Django project

First we set up an account for our test Django project:

    [root ~]# useradd djangotest
    [root ~]# su - djangotest
    [djangotest ~]$

For the full story on how to create a new Django project you should have a look at the official tutorial. For now, just execute the following as the ‘djangotest’ user:

    [djangotest ~]$ django-admin.py startproject mysite

Now we have all we need to test if the installation is working. Launch the development server like this:

    [djangotest ~]$ python mysite/manage.py runserver ec2-75-101-248-101.compute-1.amazonaws.com:8000
    Validating models...
    0 errors found

    Django version 1.0-beta_1-SVN-8461, using settings 'mysite.settings'
    Development server is running at http://ec2-75-101-248-101.compute-1.amazonaws.com:8000/
    Quit the server with CONTROL-C.

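Note that I am using the full external domain name with the ‘runserver’ command. By default, the development server listens only on 127.0.0.1, which cannot be reached from outside the instance.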

Visit ‘http://ec2-75-101-248-101.compute-1.amazonaws.com:8000/’ with your browser and you should see the regular Django ‘It worked!’ page.

Note: Please don’t use the Django development server in a production setting. In fact, you probably shouldn’t use it on anything that is exposed to the outside world. The only reason I am doing it this way in this tutorial is to keep things simple—normally you should set up a proper web server such as Apache or Lighttpd. Refer to the Django documentation for information on how to do this.

Step 10: Create a Django application

Later on I will show you how to move the Django database to persistent storage, so first we need a simple database-backed Django application with some data in it.

Modify mysite/settings.py as follows:

    DATABASE_ENGINE = 'postgresql_psycopg2'
    DATABASE_NAME = 'djangotest'
    DATABASE_USER = 'postgres'
    DATABASE_PASSWORD = ''
    ...
    INSTALLED_APPS = (
        'django.contrib.admin',
        'django.contrib.auth',
        ...
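The DATABASE_* settings above are the Django 1.0 style used throughout this tutorial. For comparison, Django 1.2 and later replaced them with a single DATABASES dictionary. The sketch below shows the equivalent configuration for those newer versions only; the Django 1.0 checkout used here does not understand it. On releases before 1.9, the engine string is 'django.db.backends.postgresql_psycopg2'.

```python
# Equivalent of the DATABASE_* settings above for Django 1.2+.
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": "djangotest",
        "USER": "postgres",
        "PASSWORD": "",
        # An empty HOST makes PostgreSQL connections go through the local
        # Unix-domain socket, which the 'local all all trust' line in
        # pg_hba.conf allows without a password.
        "HOST": "",
        "PORT": "",
    }
}
```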

Then modify mysite/urls.py to allow access to the admin GUI:

    from django.conf.urls.defaults import *

    # Uncomment the next two lines to enable the admin:
    from django.contrib import admin
    admin.autodiscover()

    urlpatterns = patterns('',
        # Example:
        # (r'^mysite/', include('mysite.foo.urls')),

        # Uncomment the next line to enable admin documentation:
        # (r'^admin/doc/', include('django.contrib.admindocs.urls')),

        # Uncomment the next line to enable the admin:
        (r'^admin/(.*)', admin.site.root),
    )

Now we have to sync the database:

    [djangotest ~]$ python mysite/manage.py syncdb

You will be asked to create an admin user—set both the username and the password to ‘djangotest’.

Then create a Django app:

    [djangotest ~]$ python mysite/manage.py startapp myapp

If you got the preceding steps right, you should now be able to log on to the admin GUI at http://ec2-75-101-248-101.compute-1.amazonaws.com:8000/admin/ with the ‘djangotest’ user.

Add a new user to verify that the database connection works; we will need that new user later on.

Step 11: Create and mount an EBS volume

This is where things get really cool!

There is a huge problem with our current setup: once you terminate the EC2 instance, all the data in our database will disappear. Enter EBS.

EBS lets you define a persistent storage volume that can be mounted by EC2 instances. If we move our database files to an EBS volume then they will persist no matter what happens to our EC2 instances.

First, go back to Elasticfox and make a note of the availability zone of your running instance—this should be something like ‘us-east-1b’.

Then click on the ‘Volumes and Snapshots’ tab. Click the ‘Create Volume’ icon and create a 1 GB volume in the same availability zone as your instance (an EBS volume can only be attached to instances in its own availability zone).

Right-click the new volume and choose ‘Attach this volume’ to attach it to the running instance. Use /dev/sdh as the device name. Refresh after a couple of seconds; the ‘Attachment status’ should have changed to ‘attached’.

Go back to your terminal and create an ext3 filesystem on the new volume:

    [root ~]# mkfs.ext3 /dev/sdh
    mke2fs 1.40.4 (31-Dec-2007)
    /dev/sdh is entire device, not just one partition!
    Proceed anyway? (y,n) y
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    131072 inodes, 262144 blocks
    13107 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=268435456
    8 block groups
    32768 blocks per group, 32768 fragments per group
    16384 inodes per group
    Superblock backups stored on blocks:
            32768, 98304, 163840, 229376

    Writing inode tables: done
    Creating journal (8192 blocks): done
    Writing superblocks and filesystem accounting information: done

    This filesystem will be automatically checked every 35 mounts or
    180 days, whichever comes first. Use tune2fs -c or -i to override.
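The numbers in this output are consistent with each other, and a few lines of arithmetic also explain why the 1 GB volume later shows up as 1.1G in df --si: 262144 blocks of 4096 bytes is exactly 1 GiB (2^30 bytes), which is about 1.07 GB in the powers-of-1000 units that --si uses. All values below are copied from the mkfs.ext3 output above.

```python
# Figures copied from the mkfs.ext3 output above.
BLOCK_SIZE = 4096          # "Block size=4096"
TOTAL_BLOCKS = 262144      # "131072 inodes, 262144 blocks"
BLOCKS_PER_GROUP = 32768   # "32768 blocks per group"
INODES_PER_GROUP = 16384   # "16384 inodes per group"
RESERVED_PERCENT = 5       # "13107 blocks (5.00%) reserved for the super user"

size_bytes = BLOCK_SIZE * TOTAL_BLOCKS                     # raw filesystem size
groups = TOTAL_BLOCKS // BLOCKS_PER_GROUP                  # number of block groups
inodes = groups * INODES_PER_GROUP                         # total inode count
reserved_blocks = TOTAL_BLOCKS * RESERVED_PERCENT // 100   # root-only blocks

print("size: %d bytes = %.0f GiB = %.1f GB (SI units, as df --si reports)"
      % (size_bytes, size_bytes / 2**30, size_bytes / 10**9))
print("block groups:", groups)
print("inodes:", inodes)
print("reserved blocks:", reserved_blocks)
```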

All that remains is to mount the filesystem, in this case to /vol:

    [root ~]# echo "/dev/sdh /vol ext3 noatime 0 0" >> /etc/fstab
    [root ~]# mkdir /vol
    [root ~]# mount /vol
    [root ~]# df --si
    Filesystem   Size   Used  Avail  Use%  Mounted on
    /dev/sda1     11G   1.4G   8.8G   14%  /
    /dev/sda2    158G   197M   150G    1%  /mnt
    none         895M      0   895M    0%  /dev/shm
    /dev/sdh     1.1G    35M   969M    4%  /vol
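One caveat with the echo ... >> /etc/fstab line: running it a second time (for example when you redo this step on the same instance) appends a duplicate entry. Here is a minimal sketch of an idempotent alternative, written for Python 3; ensure_fstab_entry is a hypothetical helper, not part of any standard tool.

```python
import os


def _normalize(line):
    """Collapse runs of whitespace so tabs and repeated spaces compare equal."""
    return " ".join(line.split())


def ensure_fstab_entry(fstab_path, entry):
    """Append `entry` to `fstab_path` unless an equivalent line exists.

    Returns True if the file was modified, False if the entry was already there.
    """
    content = ""
    if os.path.exists(fstab_path):
        with open(fstab_path) as f:
            content = f.read()
    wanted = _normalize(entry)
    if any(_normalize(line) == wanted for line in content.splitlines()):
        return False
    with open(fstab_path, "a") as f:
        # Make sure the new entry starts on its own line.
        if content and not content.endswith("\n"):
            f.write("\n")
        f.write(wanted + "\n")
    return True


# Usage on the instance (as root):
#   ensure_fstab_entry("/etc/fstab", "/dev/sdh /vol ext3 noatime 0 0")
```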

Step 12: Moving the database to persistent storage

First make sure that PostgreSQL is stopped:

    [root ~]# service postgresql stop
    Stopping postgresql service: [ OK ]

You should also terminate your Django development server in case it is still running.

Now move the PostgreSQL database files to the EBS volume mounted at /vol:

    [root ~]# mv /var/lib/pgsql /vol

For this to work we have to make a small modification to the /etc/init.d/postgresql file—make sure that the lines starting at around line 100 look exactly like this:

    ...
    # Set defaults for configuration variables
    PGENGINE=/usr/bin
    PGPORT=5432
    PGDATA=/var/lib/pgsql
    if [ -f "$PGDATA/PG_VERSION" ] && [ -d "$PGDATA/base/template1" ]
    then
        echo "Using old-style directory structure"
    else
        PGDATA=/var/lib/pgsql/data
    fi
    PGDATA=/vol/pgsql/data
    PGLOG=/vol/pgsql/pgstartup.log
    ...

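Note that this is a Fedora-specific hack; the main idea is to have the $PGDATA variable in the init script point at /vol/pgsql/data. Because the two new assignments come after the original if/else block, they override whatever that block decided.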
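To check that the move worked before restarting PostgreSQL, you can look for the files that initdb creates in every data directory, in the same spirit as the PG_VERSION test in the init script. This is a minimal sketch: looks_like_pg_data_dir is a hypothetical helper, and the check is a heuristic, not a full validation.

```python
import os


def looks_like_pg_data_dir(path):
    """Heuristic: does `path` look like an initialized PostgreSQL data directory?

    initdb creates a PG_VERSION file and a base/ subdirectory in every data
    directory, so both should be present under /vol/pgsql/data after the move.
    """
    return (os.path.isfile(os.path.join(path, "PG_VERSION"))
            and os.path.isdir(os.path.join(path, "base")))


if __name__ == "__main__":
    # After the mv, the old location should fail and the new one should pass.
    for candidate in ("/var/lib/pgsql/data", "/vol/pgsql/data"):
        print(candidate, looks_like_pg_data_dir(candidate))
```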

For other databases the procedure will differ. A similar procedure for MySQL is available here.

PostgreSQL can now be restarted:

    [root ~]# service postgresql start
    Starting postgresql service: [ OK ]

To verify that Django is using the same database as before you can revisit the admin GUI—the new user you added previously should still be available.

And there you have it!

Step 13: Shutting down

For completeness’ sake, let’s review the steps required to shut everything down.

First, stop the database server and unmount the EBS volume:

    [root ~]# service postgresql stop
    Stopping postgresql service: [ OK ]
    [root ~]# umount /vol

Then return to Elasticfox, right-click the EBS volume and select ‘Detach this instance’. When you are done with this tutorial you can delete the volume as well, since keeping it in storage will cost you money.

Finally, go to the ‘AMIs and Instances’ tab and terminate the running instance. That should conclude your current transaction with AWS. (Refresh the volume and instance sections to verify that everything has really shut down.)

Final words

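If you now repeat steps 6 to 12, you should be able to launch a brand new EC2 instance that uses the database on your stored volume; this is left as an exercise for the reader. The deviations from the procedure are that you shouldn’t run the PostgreSQL ‘initdb’ command or create the ‘djangotest’ database, and that you should attach your existing volume rather than create a new one. Don’t run mkfs.ext3 on it (that would wipe the database) and don’t move /var/lib/pgsql onto it; just mount it and make the init script change.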

This has been a bare-bones introduction to how EBS lets you run a persistent Django installation on AWS. In real life, the following issues have to be considered:

- Use a proper web server.

- Make sure the web server log files, database logs, Django logs, etc. are moved to persistent storage as well.

- Create a custom AMI that is properly set up for your Django project (so that you don’t have to do the full setup procedure every time you launch an instance).

Then there’s scaling, backup, and so on. Nonetheless, I hope this article is enough to get you started.

Addendum

A reader pointed out that the PostgreSQL user’s home directory should also be changed. While I haven’t tried this myself, the correct procedure is probably to run usermod -d /vol/pgsql postgres as root.