A reader posed the following question:

“I’m trying to convert my django app to work with google app engine. This is preferred rather than spending $100/year extra for a site with ssh access, plus I love the appengine dashboard.

Here is my issue: My current django app is fairly static. It pulls all its data from a mysql database containing ~6,000 rows. This itself is built from a gadfly database, so it should be pretty easy to get these values into the datastore/gql.

How can I sync my database with appengine?”

This is a highly relevant problem if you are porting an existing Django application to the Google App Engine. Luckily, the App Engine SDK includes a bulk data uploader tool that does the job. Let’s work through an example where we use this tool to transfer data from an existing MySQL database onto a Django application running on Google App Engine.

Case description: We have an inventory database that is currently stored in MySQL. This database is to be made available through a Django web application that allows visitors to review the inventory. The database is named ‘customerdb’ and has a single table called ‘inventory’:

mysql> select * from inventory;
+----------+----------+
| name     | quantity |
+----------+----------+
| ham      |        2 |
| cheese   |        7 |
| macaroni |        1 |
+----------+----------+
3 rows in set (0.00 sec)

Setup: We need an App Engine-ready Django application that provides us with the views and models we need to display our inventory. For this scenario we will name the application ‘upload-demo’ and make it available on http://upload-demo.appspot.com. My earlier tutorials should provide you with what you need to build the basic application structure.

The full set of application files can be downloaded here. References to the application name and paths will have to be changed according to your system setup.

Once the fundamentals are in place you should add an inventory model that mirrors the table in our database:

# upload-demo/uploaddemo/main/models.py
from google.appengine.ext import db

class Inventory(db.Model):
    name = db.StringProperty()
    quantity = db.IntegerProperty()

We also need a view that displays the data:

# upload-demo/uploaddemo/main/views.py
from django.http import HttpResponse
from uploaddemo.main.models import Inventory

def main(request):
    result = ""
    items = Inventory.all()
    for item in items:
        result += "%s: %i<br/>" % (item.name, item.quantity)
    return HttpResponse(result)

Finally, your urls.py should point towards the view:

# upload-demo/uploaddemo/urls.py
from django.conf.urls.defaults import *

urlpatterns = patterns("",
    (r"^$", "uploaddemo.main.views.main"),
)

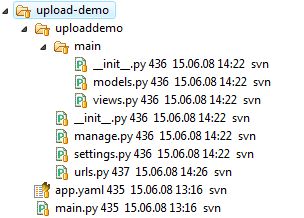

The application directory structure should look exactly like this:

To verify that we are good to go, deploy the application to App Engine:

[test@mybox ~]$ appcfg.py update upload-demo

You should see an empty page—which makes sense since we have no data yet.

Step 1 – Create a bulk load handler: The bulk loader accepts CSV-formatted data, which it feeds into the datastore:

# upload-demo/loader.py
from google.appengine.ext import bulkload

class InventoryLoader(bulkload.Loader):
    def __init__(self):
        fields = [
            ("name", str),
            ("quantity", int)
        ]
        bulkload.Loader.__init__(self, "Inventory", fields)

if __name__ == "__main__":
    bulkload.main(InventoryLoader())

In this case we have created a loader for the Inventory model where the fields match the name and type of the fields in the model. Note that the loader is kept outside of the Django application.
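To make the role of the field list more concrete, here is a minimal standalone sketch of the idea: each CSV column is paired with a (name, converter) tuple and converted in order. This is not the SDK's implementation. The names `FIELDS`, `convert_row` and `convert_csv` are hypothetical and exist only for illustration, and the script is meant to run locally without App Engine.

```python
import csv
import io

# Same (property name, converter) pairs as in the InventoryLoader shown earlier.
FIELDS = [("name", str), ("quantity", int)]


def convert_row(row, fields=FIELDS):
    """Pair each CSV column with its field and apply the converter."""
    if len(row) != len(fields):
        raise ValueError("expected %d columns, got %d" % (len(fields), len(row)))
    return dict((name, conv(value)) for (name, conv), value in zip(fields, row))


def convert_csv(text, fields=FIELDS):
    """Convert every row of a CSV string into a dictionary of typed values."""
    return [convert_row(row, fields) for row in csv.reader(io.StringIO(text))]


if __name__ == "__main__":
    for entity in convert_csv("ham,2\ncheese,7\nmacaroni,1\n"):
        print(entity)
```

Running a check like this against the CSV file before uploading is a cheap way to catch rows with a missing column or a non-numeric quantity, since `int` raises `ValueError` for those.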

Step 2 – Add the handler to the project: This is done by adding an entry to app.yaml that references loader.py:

# upload-demo/app.yaml
application: upload-demo
version: 1
runtime: python
api_version: 1

handlers:
- url: /load
  script: loader.py
  login: admin

- url: /.*
  script: main.py

A login will be required to access the loader URL—we don’t want anyone to add to our inventory without permission.

Step 3 – Convert the data to CSV:

Getting this step right can be surprisingly tricky, depending on your legacy database. For MySQL, the user account may need the FILE privilege, which allows it to write files on the database server:

[root@mybox ~]# mysql -u root
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 74740
Server version: 5.0.45 Source distribution

Type 'help;' or '\h' for help. Type '\c' to clear the buffer.

mysql> grant file on *.* to 'test'@'localhost';
Query OK, 0 rows affected (0.01 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.01 sec)

This command might have to be run as root, depending on how your database is configured. To do the data dump we run the following select statement:

[test@mybox ~]$ mysql -u test customerdb -e "select * into
outfile '/tmp/inventory.txt' fields terminated by ',' from
inventory"
[test@mybox ~]$ cat /tmp/inventory.txt
ham,2
cheese,7
macaroni,1

If you are using PostgreSQL you can achieve the same by using the COPY command.
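If writing the file from inside the database server is not an option, a short script can produce an equivalent file. The following is a minimal sketch, assuming the rows come from any Python DB-API cursor (for example `cursor.fetchall()` after selecting `name, quantity`). The function name `write_inventory_csv` is hypothetical, and the sample rows stand in for a real query result. A side benefit of the standard `csv` module is that it quotes values that contain commas.

```python
import csv
import io


def write_inventory_csv(rows, out):
    """Write (name, quantity) rows to a file-like object as CSV.

    `rows` can be any iterable of 2-tuples, such as the result of
    cursor.fetchall() on a DB-API cursor. Returns the number of rows written.
    """
    writer = csv.writer(out, lineterminator="\n")
    count = 0
    for name, quantity in rows:
        writer.writerow([name, int(quantity)])
        count += 1
    return count


if __name__ == "__main__":
    # Stand-in for: cursor.execute("select name, quantity from inventory")
    sample_rows = [("ham", 2), ("cheese", 7), ("macaroni", 1)]
    buf = io.StringIO()
    write_inventory_csv(sample_rows, buf)
    print(buf.getvalue(), end="")
```

To write to disk instead, pass an open file object in place of the `StringIO` buffer.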

Step 4 – Upload the data: First, redeploy your application to App Engine:

[test@mybox ~]$ appcfg.py update upload-demo

We then use the bulkload_client.py script to upload our CSV file. The script is found in the tools folder of your App Engine installation—you may have to add it to your PATH. Note that you have to use double dashes for the parameters.

[test@mybox ~]$ bulkload_client.py --filename=/tmp/inventory.txt \
    --kind=Inventory --url=http://upload-demo.appspot.com/load
INFO 2008-06-15 07:39:21,682 bulkload_client.py] Starting import; maximum 10 entities per post
INFO 2008-06-15 07:39:21,684 bulkload_client.py] Importing 3 entities in 29 bytes
ERROR 2008-06-15 07:39:21,997 bulkload_client.py] An error occurred while importing: Received code 302: Found
<HTML><HEAD><meta http-equiv="content-type" content="text/html;charset=utf-8">
<TITLE>302 Moved</TITLE></HEAD><BODY>
<H1>302 Moved</H1>
The document has moved
<A HREF="https://www.google.com/accounts/ServiceLogin?service=ah&
continue=http://upload-demo.appspot.com/_ah/login%3Fcontinue%3Dhttp://
upload-demo.appspot.com/load&ltmpl=gm&ahname=Django+data+u
pload+demo&sig=f9861d41d527e55f15742b8d54504bcc">here</A>.
</BODY></HTML>
ERROR 2008-06-15 07:39:21,997 bulkload_client.py] Import failed

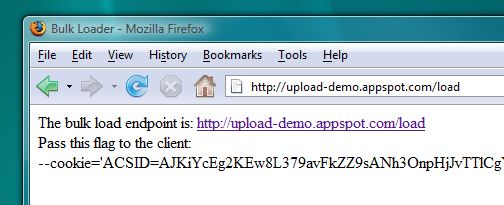

Now, that didn’t work. Remember that app.yaml says we have to authenticate ourself as an admin user before we can upload data. Try visiting http://upload-demo.appspot.com/load in a web browser. After having authenticated yourself using your Google account you will be redirected to the following page:

Just what we needed! Add the cookie string parameter to the previous request and try again:

[test@mybox ~]$ bulkload_client.py --filename=/tmp/inventory.txt \
    --kind=Inventory --url=http://upload-demo.appspot.com/load \
    --cookie='ACSID=AJKiYcE[...]1Hh4'
INFO 2008-06-15 07:50:58,541 bulkload_client.py] Starting import; maximum 10 entities per post
INFO 2008-06-15 07:50:58,549 bulkload_client.py] Importing 3 entities in 29 bytes
INFO 2008-06-15 07:50:59,102 bulkload_client.py] Import succcessful

If you visit http://upload-demo.appspot.com you should now see the data we just uploaded.
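The log line "maximum 10 entities per post" indicates that the client sends rows in batches rather than one request per row. The sketch below illustrates that batching idea only, assuming each batch is simply a run of consecutive CSV lines. The function `batch_lines` is a hypothetical helper, not part of the SDK.

```python
def batch_lines(lines, batch_size=10):
    """Yield consecutive groups of at most `batch_size` lines."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batch = []
    for line in lines:
        batch.append(line)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        # Emit the final, possibly shorter, batch.
        yield batch


if __name__ == "__main__":
    rows = ["item%d,%d" % (i, i) for i in range(23)]
    for number, batch in enumerate(batch_lines(rows), 1):
        print("post %d: %d rows" % (number, len(batch)))
```

At ten rows per post, a table of about 6,000 rows like the one in the reader's question would take roughly 600 posts.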

Final notes: This simple example should be enough to get you started. When converting real-life databases you will have to deal with more complex schemas with references between tables. The discussion here should point you in the right direction. You may also find the SDK documentation on types and property classes useful when porting your legacy database.